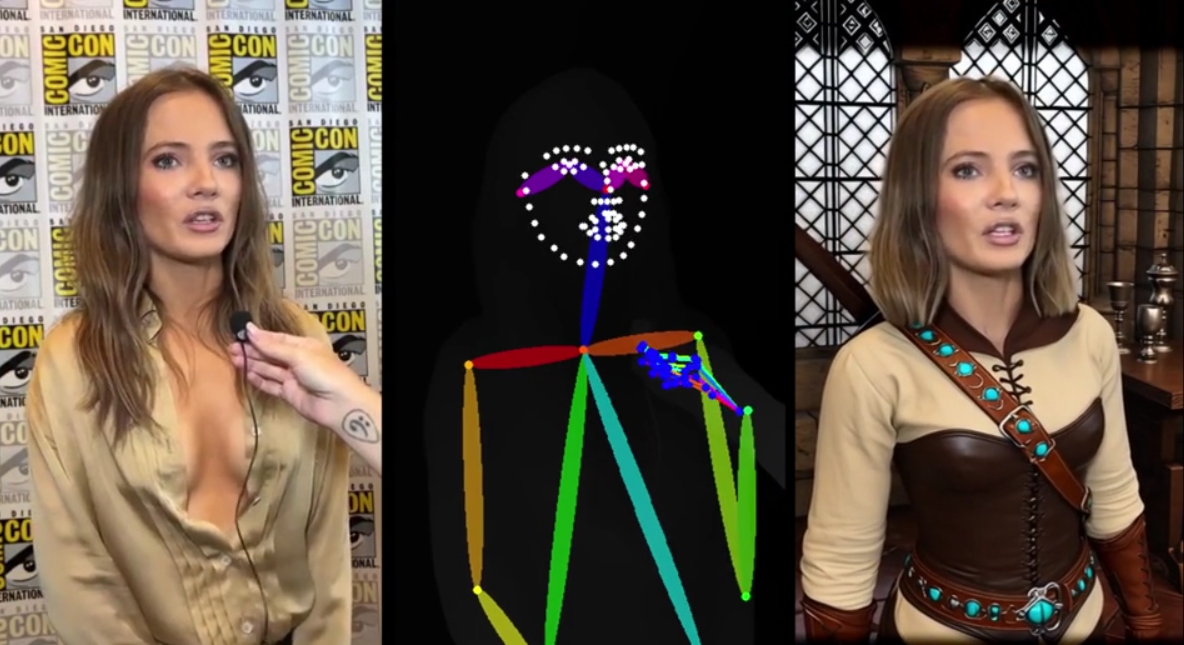

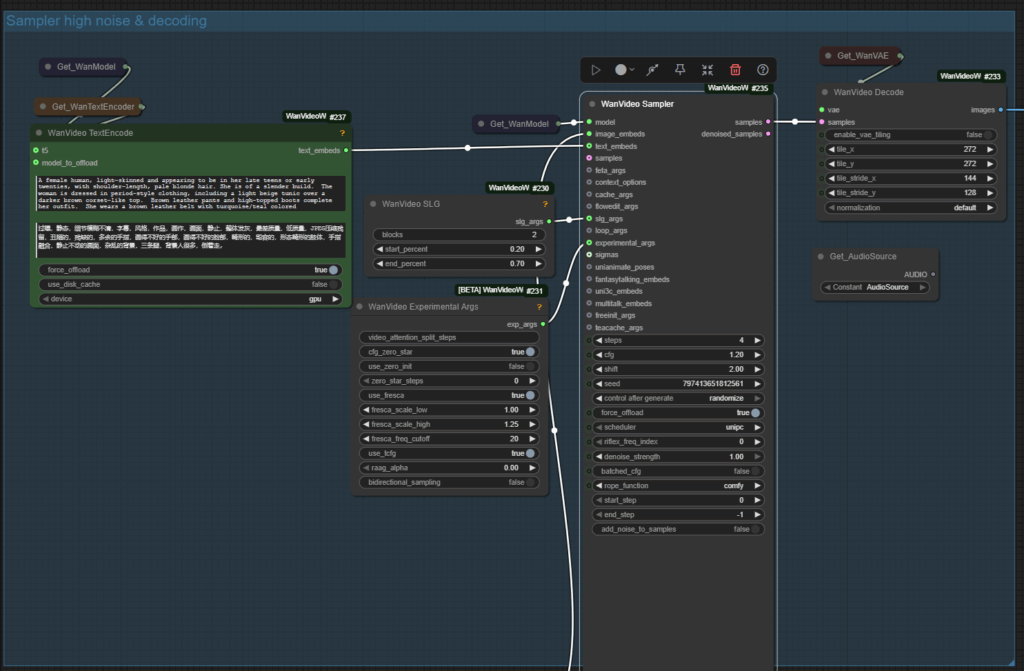

In this guide, we’ll break down a workflow that combines Florence2, WAN 2.1 VACE, and smart masking techniques to composite a person onto an AI-generated body inspired by The Witcher 3.

What This Workflow Does

Download workflow v1 (without arm tracking) here.

Download workflow v2 (with arm tracking) here.

Link to Reddit thread here.

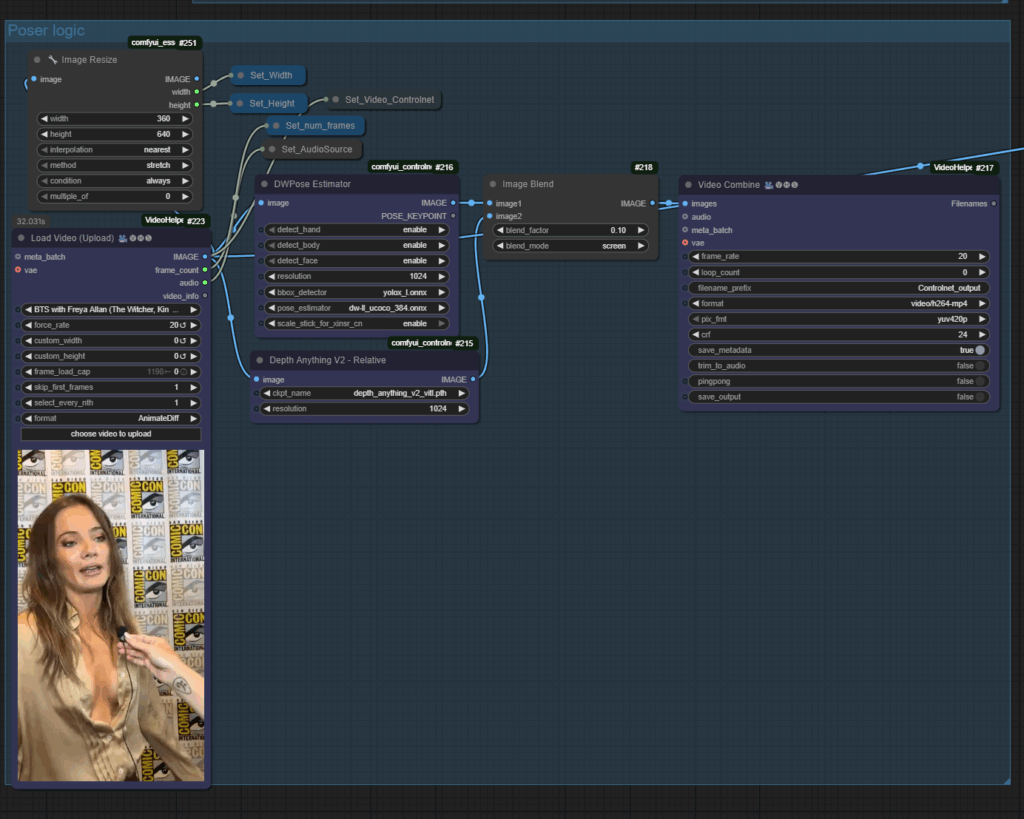

Arm movement tracking.

- The reference video provides the motion data (head turns, expressions, movement), which is extracted and passed to Florence2

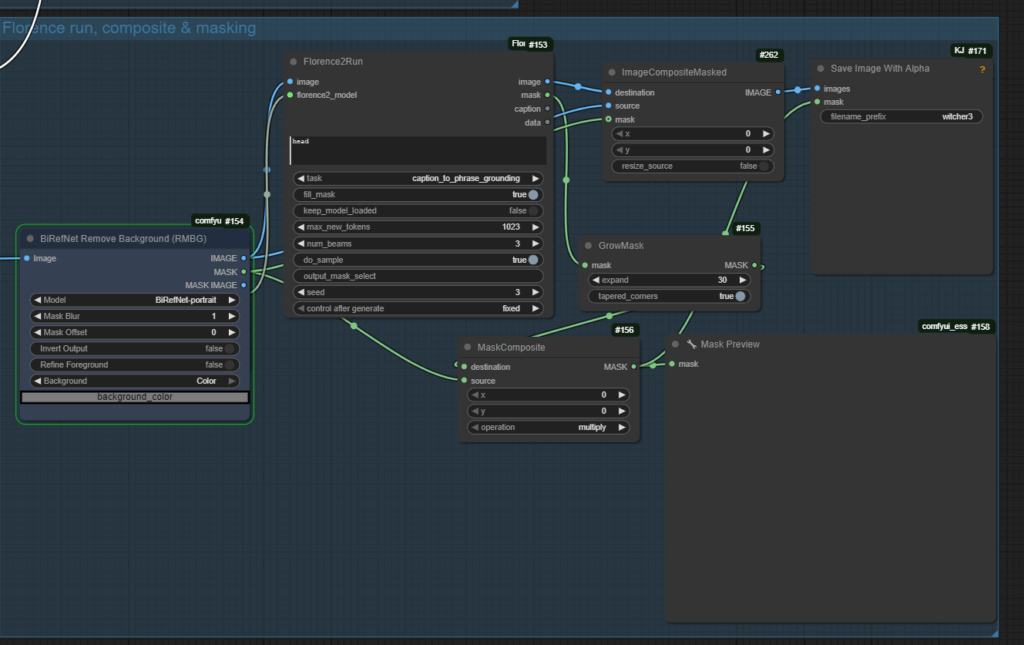

- Florence2 detects and masks the person’s head from the source video

- Florence2 is run in caption-to-phrase grounding mode with the prompt "head".
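To make the head-masking step more concrete, here is a minimal sketch of how grounded bounding boxes could be turned into a binary mask. It is an illustration only: in the workflow the Florence2 node produces the mask for you. The `detection` dictionary is made-up sample data shaped like a phrase-grounding result (a list of `bboxes` plus matching `labels`), and `boxes_to_mask` is a hypothetical helper, not part of any library.

```python
import math
from typing import Dict, List


def boxes_to_mask(result: Dict[str, list], width: int, height: int,
                  label: str = "head") -> List[List[int]]:
    """Rasterize every box whose label matches into a 0/1 mask (hypothetical helper)."""
    mask = [[0] * width for _ in range(height)]
    for (x1, y1, x2, y2), box_label in zip(result["bboxes"], result["labels"]):
        if box_label != label:
            continue
        # Round outward and clamp to the frame so the box fully covers the head.
        left, top = max(0, math.floor(x1)), max(0, math.floor(y1))
        right, bottom = min(width, math.ceil(x2)), min(height, math.ceil(y2))
        for y in range(top, bottom):
            for x in range(left, right):
                mask[y][x] = 1
    return mask


# Assumed sample detection for a single 8x6 frame (not real model output).
detection = {"bboxes": [[2.0, 1.0, 5.0, 4.0]], "labels": ["head"]}
head_mask = boxes_to_mask(detection, width=8, height=6)
```

For a video, the same conversion would run once per frame, giving a mask sequence that follows the head as it moves.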

- Remove Background and Refine Mask

- BiRefNet-RMBG (background remover) is applied.

- The mask is expanded and cleaned with GrowMask and MaskComposite.
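The mask cleanup can be pictured with a small pure-Python sketch. It is only an approximation of the idea behind growing and compositing masks, not the code the ComfyUI nodes run: `grow_mask` dilates a binary mask by a number of pixels using a square neighborhood, and `composite` combines two masks with a named operation. Both function names, the sample masks, and the choice to intersect the grown head mask with a foreground mask are assumptions for illustration; the exact wiring in the workflow may differ.

```python
from typing import List

Mask = List[List[int]]


def grow_mask(mask: Mask, expand: int) -> Mask:
    """Dilate a 0/1 mask by `expand` pixels (square neighborhood)."""
    height, width = len(mask), len(mask[0])
    grown = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            # Switch on every pixel within `expand` of a masked pixel.
            for ny in range(max(0, y - expand), min(height, y + expand + 1)):
                for nx in range(max(0, x - expand), min(width, x + expand + 1)):
                    grown[ny][nx] = 1
    return grown


def composite(dst: Mask, src: Mask, operation: str) -> Mask:
    """Combine two same-sized 0/1 masks pixel by pixel."""
    ops = {
        "add": lambda a, b: min(1, a + b),       # union
        "subtract": lambda a, b: max(0, a - b),  # remove src from dst
        "multiply": lambda a, b: a * b,          # intersection
    }
    op = ops[operation]
    return [[op(a, b) for a, b in zip(dst_row, src_row)]
            for dst_row, src_row in zip(dst, src)]


# Assumed sample data: a one-pixel head mask and a foreground mask
# covering the three left-most columns of a 5x5 frame.
head = [[1 if (x, y) == (2, 2) else 0 for x in range(5)] for y in range(5)]
foreground = [[1 if x <= 2 else 0 for x in range(5)] for _ in range(5)]

grown = grow_mask(head, expand=1)
refined = composite(grown, foreground, "multiply")
```

Growing the mask first gives the generator some margin around the head, and the composite step then trims that margin wherever the background remover says there is no subject.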

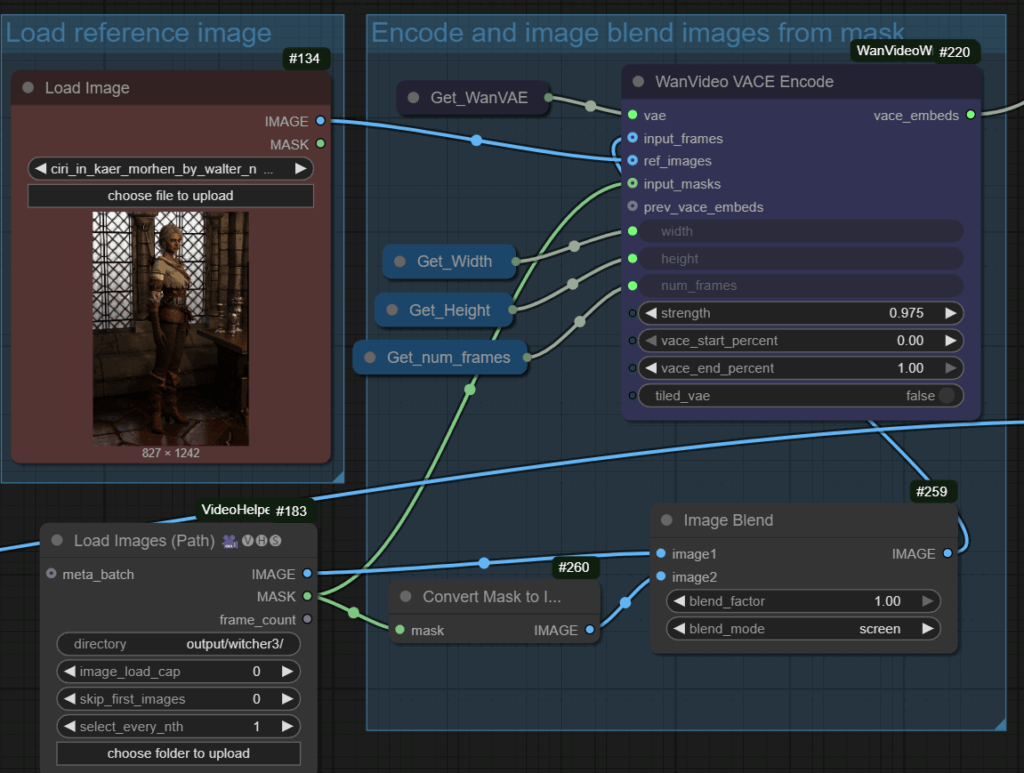

- Apply your reference photo

- A still image from The Witcher 3 is loaded.

- WAN VACE encodes this as the reference style and clothing.

- Combine WAN text and VACE embeds, then sit back and wait for generation

- On an RTX 4090, generation took about 40 minutes.

- On an RTX PRO 6000, it took 4 minutes.

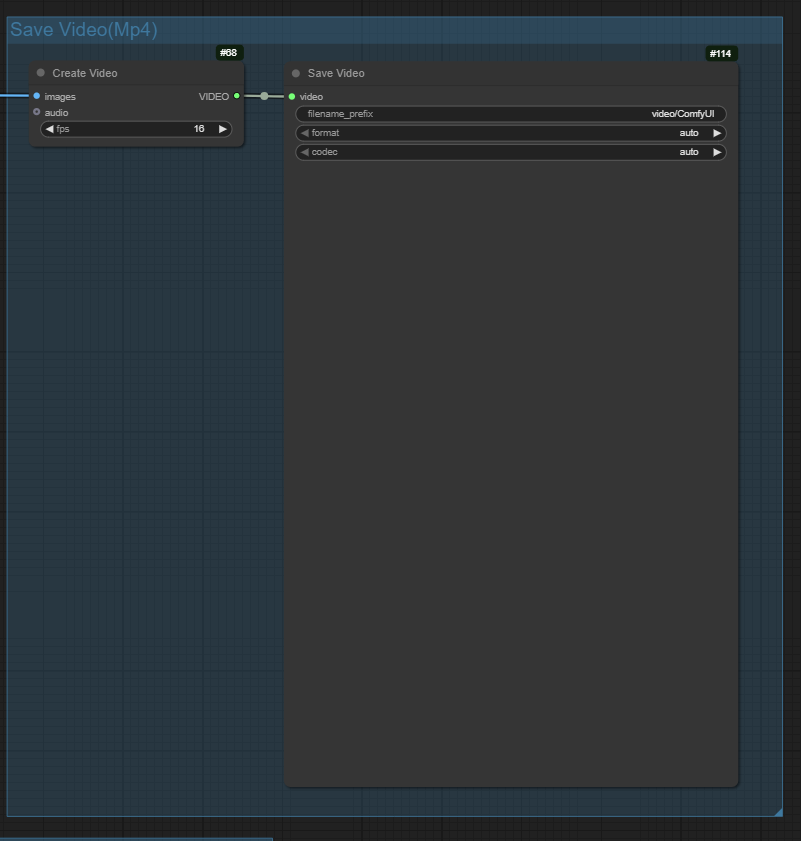

- Save and review!

Creative Applications

- Fan Edits

- Place yourself into anything!

- Virtual Production

- Generate quick previews of how an actor would look in full costume without expensive shoots.

- Cosplay Visualization

- See your cosplay concept in motion before you build it.

Final Thoughts

Whether you’re a fan editor, digital artist, or just experimenting, this is a glimpse into the future of video generation.