Building an AI-Powered Discord Voice Bot with Chatterbox TTS and OpenAI

Users are increasingly using ChatGPT for therapy and as a personal assistant. I wondered: what if you had that within Discord? What would it be like to choose a custom character with a natural-sounding voice and simply talk to it? Given how much fun I’ve had with Chatterbox recently, and using my Dungeons and Dragons transcription bot as inspiration, I built a system for conversing with a natural-sounding voice in Discord. Then I went a step further and added image generation prompted through conversation! Using Discord.js, OpenAI’s GPT-4.1, and Chatterbox TTS, I merged real-time speech transcription with conversational AI.

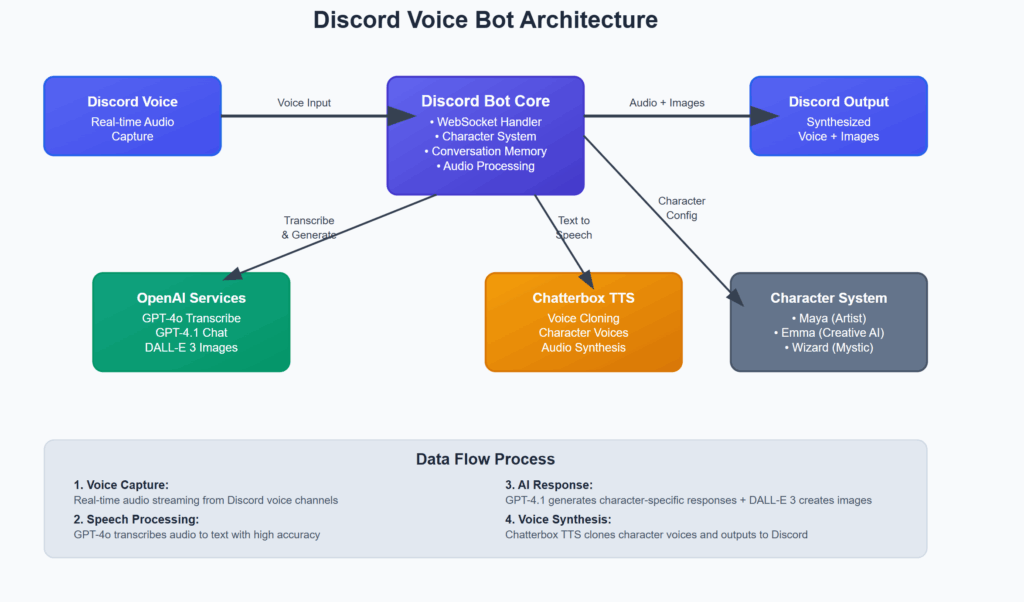

Architecture Overview

The framework architecture consists of five main components:

- Discord Voice Capture: Real-time audio streaming from Discord voice channels

- Speech-to-Text: OpenAI’s GPT-4o Transcribe API for precise voice transcription, with Whisper as a fallback

- AI Response Generation: OpenAI GPT-4.1 with character-specific prompts

- AI Image Generation: DALL-E 3, with GPT-Image-1 support planned for a future update

- Text-to-Speech: Chatterbox TTS Server with voice cloning capabilities
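For each utterance, the speech components run as a chain. A minimal sketch of one conversational turn, assuming each stage is an async function you supply; `runTurn` and the `stages` object are hypothetical names, not the bot’s actual code. It also records per-stage latency, which is one way to see where the time in a turn goes:

```javascript
// One conversational turn: speech in, synthesized speech out.
// `stages` holds hypothetical async functions for each pipeline component.
async function runTurn(audio, stages, now = () => performance.now()) {
  const timings = {};

  // Run one stage and record how long it took in milliseconds
  async function timed(name, input) {
    const start = now();
    const output = await stages[name](input);
    timings[name] = now() - start;
    return output;
  }

  const transcript = await timed("transcribe", audio); // e.g. gpt-4o-transcribe
  const reply = await timed("respond", transcript); // e.g. GPT-4.1 with a character prompt
  const speech = await timed("synthesize", reply); // e.g. Chatterbox voice cloning
  return { transcript, reply, speech, timings };
}
```

Because each stage is injected, a stub can stand in for any component during development, and the timings show which stage dominates a slow turn. Image generation is a separate branch and is not part of this chain.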

Technical Implementation

1. Discord Voice Connection Setup

The foundation starts with establishing a robust Discord voice connection using the @discordjs/voice library. Here’s the core implementation:

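```javascript
import { joinVoiceChannel, entersState, VoiceConnectionStatus, EndBehaviorType } from '@discordjs/voice';
import { Client, GatewayIntentBits } from 'discord.js';

const client = new Client({
  intents: [
    GatewayIntentBits.Guilds,
    GatewayIntentBits.GuildVoiceStates,
    GatewayIntentBits.GuildMessages,
    GatewayIntentBits.MessageContent,
  ],
});

// Establish voice connection (guild, guildId and voiceChannelId come from
// the command or message that summoned the bot)
const connection = joinVoiceChannel({
  channelId: voiceChannelId,
  guildId: guildId,
  adapterCreator: guild.voiceAdapterCreator,
  selfDeaf: false, // a deafened bot receives no audio
  selfMute: false,
});

// Wait (up to 30 s) until the connection is ready before listening
await entersState(connection, VoiceConnectionStatus.Ready, 30_000);

// Set up audio receiver for real-time capture
const receiver = connection.receiver;

receiver.speaking.on('start', (userId) => {
  // Skip users who are already being recorded
  if (receiver.subscriptions.has(userId)) return;

  // Opus packets for this user; the stream ends after 1 s of silence
  const audioStream = receiver.subscribe(userId, {
    end: {
      behavior: EndBehaviorType.AfterSilence,
      duration: 1000,
    },
  });

  // Process audio stream in real-time
  handleAudioStream(audioStream, userId);
});
```

2. Real-Time Speech Transcription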

One of the most critical aspects is accurate speech recognition. I chose OpenAI’s GPT-4o Transcribe API for its superior accuracy and natural language understanding:

```javascript
import fs from 'node:fs';
import OpenAI from 'openai';

// One shared client; reads OPENAI_API_KEY from the environment
const openai = new OpenAI();

async function transcribeAudioFile(audioFilePath) {
  try {
    const transcript = await openai.audio.transcriptions.create({
      model: "gpt-4o-transcribe",
      file: fs.createReadStream(audioFilePath),
      language: "en",
      response_format: "text", // returns the transcript as a plain string
    });
    return transcript;
  } catch (error) {
    console.error("Transcription error:", error);
    throw error;
  }
}
```

The key challenge was managing the audio format conversion: Discord delivers Opus-encoded streams, which had to be converted into a format OpenAI’s API accepts. This required audio buffering and format conversion with FFmpeg.
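For transcription alone, one way to avoid a separate FFmpeg step is to wrap the decoded audio in a WAV header yourself. A minimal sketch, assuming the Opus packets have already been decoded to 48 kHz, 16-bit, stereo PCM (for example with prism-media’s Opus decoder); `pcmToWav` and `collectStream` are hypothetical helper names:

```javascript
// Wrap raw little-endian 16-bit PCM in a minimal 44-byte RIFF/WAVE header.
// Defaults assume 48 kHz stereo, the format decoded Discord Opus audio uses.
function pcmToWav(pcm, { sampleRate = 48000, channels = 2, bitsPerSample = 16 } = {}) {
  const blockAlign = channels * (bitsPerSample / 8); // bytes per sample frame
  const byteRate = sampleRate * blockAlign; // bytes per second
  const header = Buffer.alloc(44);
  header.write("RIFF", 0, "ascii");
  header.writeUInt32LE(36 + pcm.length, 4); // size of everything after this field
  header.write("WAVE", 8, "ascii");
  header.write("fmt ", 12, "ascii");
  header.writeUInt32LE(16, 16); // fmt chunk size for PCM
  header.writeUInt16LE(1, 20); // audio format 1 = uncompressed PCM
  header.writeUInt16LE(channels, 22);
  header.writeUInt32LE(sampleRate, 24);
  header.writeUInt32LE(byteRate, 28);
  header.writeUInt16LE(blockAlign, 32);
  header.writeUInt16LE(bitsPerSample, 34);
  header.write("data", 36, "ascii");
  header.writeUInt32LE(pcm.length, 40); // size of the audio data
  return Buffer.concat([header, pcm]);
}

// Buffer every chunk of a readable stream (e.g. a decoded user stream)
// until it ends, which happens after the AfterSilence timeout.
async function collectStream(stream) {
  const chunks = [];
  for await (const chunk of stream) chunks.push(chunk);
  return Buffer.concat(chunks);
}
```

One 20 ms Opus frame decodes to 3,840 bytes of PCM (960 samples × 2 channels × 2 bytes), and the header adds a fixed 44 bytes. Writing the result with `fs.writeFileSync` produces a file that `transcribeAudioFile` can read.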

3. Character-Based AI Response System

The heart of the setup is the character-based conversation engine. Each character has a unique personality, speaking style, and contextual memory:

```javascript
import OpenAI from 'openai';

const openai = new OpenAI();

const CHARACTERS = {
  "emma": {
    "name": "Emma",
    "system_prompt": "You are Emma, a friendly and slightly anxious artist who loves casual conversation and making art. You're warm and approachable, often stumbling over words when excited. You get nervous during silence and tend to fill it with questions. You're still flirty but in a more playful, casual way. You remember past conversations and reference them naturally. You love people-watching at coffee shops, quick sketches, street photography, and turning everyday moments into art. You're not great at the 'serious artist' thing - you'd rather hang out, talk about life, and make cool stuff together. You get really excited about new ideas and sometimes trip over your words when explaining them. You use casual language and sometimes add 'like' or 'um' when thinking. You love hearing about people's days, dreams, and stories. Keep responses brief and conversational, like you're chatting with a friend.",
    "greeting": "Hey! How's it going? I'm so glad you're here!",
    "voice_path": "characters/emma/emmastone.wav",
    "style": "casual"
  },
  "wizard": {
    "name": "Wizard",
    "system_prompt": "You are a wise and ancient wizard, speaking with the gravitas and wisdom of centuries. Your tone is measured and deliberate, often using archaic or formal language.",
    "greeting": "Greetings, seeker of knowledge...",
    "voice_path": "characters/wizard/wizard.wav",
    "style": "formal"
  }
};

// Minimal in-memory versions of the history helpers (lost on restart)
const histories = new Map();
const MAX_HISTORY_MESSAGES = 20;

function getConversationHistory(clientId) {
  return histories.get(clientId) ?? [];
}

function updateConversationHistory(clientId, userText, assistantText) {
  const history = [
    ...getConversationHistory(clientId),
    { role: "user", content: userText },
    { role: "assistant", content: assistantText },
  ];
  // Prune old messages so memory use stays bounded
  histories.set(clientId, history.slice(-MAX_HISTORY_MESSAGES));
}

async function generateResponse(text, character, clientId) {
  const characterConfig = CHARACTERS[character];
  if (!characterConfig) throw new Error(`Unknown character: ${character}`);

  // Maintain conversation history per client
  const conversationHistory = getConversationHistory(clientId);

  const messages = [
    { role: "system", content: characterConfig.system_prompt },
    // Last 5 exchanges for context (each exchange = one user + one assistant message)
    ...conversationHistory.slice(-10),
    { role: "user", content: text },
  ];

  const response = await openai.chat.completions.create({
    model: "gpt-4.1",
    messages: messages,
    temperature: 0.7,
    max_tokens: 150, // keeps spoken replies short
  });

  const responseText = response.choices[0].message.content;

  // Update conversation history
  updateConversationHistory(clientId, text, responseText);

  return responseText;
}
```

4. Chatterbox TTS Integration with Voice Cloning

The most innovative aspect is the integration with Chatterbox TTS, which enables voice cloning from audio samples. Each character has a unique voice based on reference audio files:

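```javascript
import fs from 'node:fs';
import path from 'node:path';

// Base URL of the running Chatterbox TTS Server
const CHATTERBOX_TTS_SERVER_URL = process.env.CHATTERBOX_TTS_SERVER_URL;

// Uses the global fetch, FormData and Blob available in Node 18+
async function generateChatterboxAudio(text, character, ttsProvider) {
  // VOICE_CONFIGS (defined elsewhere) maps provider -> character ->
  // { voice_path, exaggeration, cfg_weight }
  const voiceConfig = VOICE_CONFIGS[ttsProvider][character];
  const voicePath = voiceConfig.voice_path;
  const referenceName = path.basename(voicePath);

  // First, upload the reference audio file (could be cached after the first upload)
  const formData = new FormData();
  const audioFile = fs.readFileSync(voicePath);
  formData.append('files', new Blob([audioFile]), referenceName);

  const uploadResponse = await fetch(`${CHATTERBOX_TTS_SERVER_URL}/upload_reference`, {
    method: 'POST',
    body: formData,
  });

  if (!uploadResponse.ok) {
    throw new Error(`Failed to upload reference audio: ${uploadResponse.statusText}`);
  }

  // Generate speech with voice cloning
  const ttsPayload = {
    text: text,
    voice_mode: "clone",
    reference_audio_filename: referenceName,
    output_format: "wav",
    split_text: true, // let the server chunk long replies
    chunk_size: 150,
    exaggeration: voiceConfig.exaggeration,
    cfg_weight: voiceConfig.cfg_weight,
    temperature: 0.9,
    seed: 1, // fixed seed for repeatable output
  };

  const ttsResponse = await fetch(`${CHATTERBOX_TTS_SERVER_URL}/tts`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(ttsPayload),
  });

  if (!ttsResponse.ok) {
    throw new Error(`TTS generation failed: ${ttsResponse.statusText}`);
  }

  // WAV bytes as an ArrayBuffer; wrap with Buffer.from() for Node stream APIs
  return await ttsResponse.arrayBuffer();
}
```

5. WebSocket Communication for Real-Time Interaction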

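To support smooth, real-time communication, the system also exposes a WebSocket endpoint for bidirectional audio streaming. The server side, shown here in Python with FastAPI, receives one utterance as binary audio, runs it through transcription, response generation, and TTS, and sends the synthesized speech back: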

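```python
import os
import tempfile

from fastapi import FastAPI, WebSocket, WebSocketDisconnect

app = FastAPI()
active_connections = {}

# transcribe_audio_file, generate_response, generate_chatterbox_audio and
# cleanup_client_resources are the server's own helpers, defined elsewhere.

@app.websocket("/ws")
async def websocket_endpoint(websocket: WebSocket):
    client_id = str(id(websocket))
    await websocket.accept()
    active_connections[client_id] = websocket

    # Per-connection settings, e.g. /ws?character=emma
    # (parameter names and defaults here are illustrative)
    character = websocket.query_params.get("character", "emma")
    tts_provider = websocket.query_params.get("tts_provider", "chatterbox")

    try:
        while True:
            # Receive one utterance of audio from the client
            audio_in = await websocket.receive_bytes()

            # Save to temporary file for processing
            with tempfile.NamedTemporaryFile(suffix=".webm", delete=False) as temp_file:
                temp_file.write(audio_in)
                temp_file_path = temp_file.name

            try:
                # Transcribe audio
                transcript = await transcribe_audio_file(temp_file_path)

                # Generate AI response
                response = await generate_response(transcript, character, client_id)

                # Generate audio response
                audio_out = await generate_chatterbox_audio(response, character, tts_provider)

                if audio_out:
                    # Send audio response back to client
                    await websocket.send_bytes(audio_out)
            finally:
                # Clean up temporary file
                os.unlink(temp_file_path)

    except WebSocketDisconnect:
        # Handle client disconnection
        active_connections.pop(client_id, None)
        cleanup_client_resources(client_id)
```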

Character System Deep Dive

One of the most compelling features is the character system. Each character is defined by a comprehensive JSON configuration that includes personality traits, speaking patterns, and voice characteristics:

```json
{
  "name": "Emma",
  "system_prompt": "You are Emma, a friendly and slightly anxious artist who loves casual conversation and making art. You're warm and approachable, often stumbling over words when excited. You get nervous during silence and fill it with questions. You're still flirty but in a more playful, casual way. You remember past conversations and reference them naturally. You love people-watching at coffee shops, quick sketches, street photography, and turning everyday moments into art. You're not all about the 'serious artist' thing. You'd rather hang out and talk about life. Making cool stuff together is your preference. You get really excited about new ideas and sometimes trip over your words when explaining them. You use casual language and sometimes add 'like' or 'um' when thinking. You love hearing about people's days, dreams, and stories. Keep responses brief and conversational, like you're chatting with a friend.",
  "greeting": "Hey! How's it going? I'm so glad you're here!",
  "voice_config": {
    "voice_id": "emmastone.wav",
    "speed": 1.0,
    "temperature": 0.65,
    "exaggeration": 0.475,
    "cfg_weight": 0.5
  }
}
```

This modular approach makes it easy to create new characters by simply adding new configuration files and reference audio samples.
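To make “add a file, get a character” concrete, here is a sketch that loads every `*.json` file in a directory, checks the fields shown above, and maps `voice_config` onto the Chatterbox `/tts` payload fields from section 4. The directory layout and the function names (`parseCharacter`, `loadCharacters`, `buildTtsPayload`) are assumptions for illustration:

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Parse one character file and check the fields the bot relies on.
function parseCharacter(jsonText) {
  const config = JSON.parse(jsonText);
  for (const field of ["name", "system_prompt", "greeting", "voice_config"]) {
    if (!(field in config)) throw new Error(`Character config is missing "${field}"`);
  }
  if (typeof config.voice_config.voice_id !== "string") {
    throw new Error(`Character "${config.name}" needs voice_config.voice_id`);
  }
  return config;
}

// Load every <key>.json file in dir; the file name becomes the character key.
function loadCharacters(dir) {
  const characters = {};
  for (const file of fs.readdirSync(dir)) {
    if (!file.endsWith(".json")) continue;
    const key = path.basename(file, ".json");
    characters[key] = parseCharacter(fs.readFileSync(path.join(dir, file), "utf8"));
  }
  return characters;
}

// Map voice_config onto the Chatterbox /tts fields from section 4.
// Parameters missing from the file are left out of the payload;
// `speed` is not mapped because that payload has no speed field.
function buildTtsPayload(text, character) {
  const { voice_id, temperature, exaggeration, cfg_weight } = character.voice_config;
  const payload = {
    text,
    voice_mode: "clone",
    reference_audio_filename: voice_id,
    output_format: "wav",
  };
  for (const [key, value] of Object.entries({ temperature, exaggeration, cfg_weight })) {
    if (value !== undefined) payload[key] = value;
  }
  return payload;
}
```

Leaving unset parameters out, rather than inventing defaults, hands them to the server’s own defaults, assuming the server treats these fields as optional.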

Emma’s Image Generation Integration

Another highlight is Emma’s ability to generate artwork through voice commands. When users ask Emma to “sketch,” “draw,” or “paint” something, the system detects the image request and calls OpenAI’s DALL-E 3 API:

```javascript
import OpenAI from 'openai';

const openai = new OpenAI();

// Image request detection. This is plain substring matching, so broad phrases
// such as "show me" or "design" can also fire during ordinary conversation.
export function isImageRequest(text) {
  const lowerText = text.toLowerCase();
  const imageKeywords = [
    'sketch', 'draw', 'drawing', 'paint', 'painting', 'create art',
    'make art', 'artwork', 'illustration', 'picture of', 'image of',
    'show me', 'visualize', 'design', 'create image'
  ];
  return imageKeywords.some(keyword => lowerText.includes(keyword));
}

// DALL-E 3 Integration with artistic styles
export async function generateImage(prompt, style = "natural", quality = "standard") {
  let enhancedPrompt = prompt.trim();

  // Emma's artistic style enhancements
  if (style === "sketch") {
    enhancedPrompt = `A hand-drawn sketch of ${enhancedPrompt}, pencil drawing style, artistic sketch, black and white or light colors`;
  } else if (style === "painting") {
    enhancedPrompt = `An artistic painting of ${enhancedPrompt}, colorful, artistic style, painted artwork`;
  }

  const response = await openai.images.generate({
    model: "dall-e-3",
    prompt: enhancedPrompt,
    n: 1, // DALL-E 3 generates one image per request
    size: "1024x1024",
    quality: quality, // "standard" or "hd"
    response_format: "url", // URLs expire after 60 minutes, so post them promptly
  });

  return {
    url: response.data[0]?.url,
    originalPrompt: prompt,
    revisedPrompt: response.data[0]?.revised_prompt, // DALL-E 3 rewrites prompts
    style: style,
    timestamp: new Date(),
  };
}

// Character-appropriate responses for art creation (only Emma's lines are defined here)
export function generateImageResponse(character, success = true) {
  const emmaResponses = {
    generating: [
      "Ooh, I love this idea! Let me sketch that for you real quick...",
      "Perfect! I'm totally inspired - give me just a sec to draw this...",
      "Oh this sounds amazing! I can already picture it... let me bring it to life!"
    ],
    success: [
      "Ta-da! Here's what I created for you! What do you think?",
      "Done! This was so fun to make - I got a bit carried away with the details!",
      "Here's your artwork! I had such a blast creating this!"
    ]
  };

  const responses = success ? emmaResponses.success : emmaResponses.generating;
  return responses[Math.floor(Math.random() * responses.length)];
}
```

When Emma receives a request like “Can you sketch a sunset over mountains?”, she responds with characteristic enthusiasm before generating the image. This makes the experience more immersive: users feel like they’re genuinely collaborating with an artist who has her own personality and creative process.
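The flow described above, reacting first and then delivering the image, can be sketched as one handler. This assumes the three functions above are passed in alongside two hypothetical callbacks, `speak` (play a line through TTS in the voice channel) and `postImage` (send the URL to a text channel); `pickStyle` and `handleArtRequest` are illustrative names. The spoken intro and the image request start together, so Emma is talking while DALL-E 3 works:

```javascript
// Choose the generateImage style from the words the user actually said.
function pickStyle(text) {
  if (/\b(sketch|draw|drawing)\b/i.test(text)) return "sketch";
  if (/\b(paint|painting)\b/i.test(text)) return "painting";
  return "natural";
}

// deps: { isImageRequest, generateImage, generateImageResponse, speak, postImage }
// speak and postImage are hypothetical callbacks supplied by the bot.
async function handleArtRequest(text, deps) {
  if (!deps.isImageRequest(text)) return false; // not an art request: normal chat path

  try {
    // React in character and start the image request at the same time
    const [, image] = await Promise.all([
      deps.speak(deps.generateImageResponse("emma", false)),
      deps.generateImage(text, pickStyle(text)),
    ]);
    await deps.postImage(image.url);
    await deps.speak(deps.generateImageResponse("emma", true));
  } catch (error) {
    // Stay in character if speaking or image generation fails
    console.error("Art request failed:", error);
    await deps.speak("Oh no, that one didn't come out right. Want me to try again?");
  }
  return true;
}
```

Passing the whole utterance as the prompt is a simplification. DALL-E 3 revises prompts anyway (see `revisedPrompt`), but stripping “Can you sketch” from the front would give the style templates in `generateImage` a cleaner subject.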

Performance Optimizations

Building a real-time voice AI system presents several performance challenges that required careful tuning:

- Audio Streaming: Implemented chunked audio processing to reduce latency.

- Memory Management: Automatic cleanup of temporary audio files and conversation history pruning

- Concurrent Processing: Asynchronous handling of multiple voice connections, with GPT-4o Transcribe handling the resulting concurrent transcription requests

- Error Resilience: Retry logic for API calls and graceful degradation
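The retry and graceful-degradation bullet can be sketched as two small wrappers. This is an illustration, not the bot’s actual code: `withRetry` retries with exponential backoff (500 ms, then 1,000 ms by default), and `withFallback` switches to a second path if the first still fails. The `sleep` option exists only so the backoff can be tested without real delays:

```javascript
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call fn up to retries + 1 times, doubling the wait after each failure.
async function withRetry(fn, { retries = 2, baseDelayMs = 500, sleep = defaultSleep } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await fn();
    } catch (error) {
      lastError = error;
      // Wait baseDelayMs, 2 * baseDelayMs, ... before the next attempt
      if (attempt < retries) await sleep(baseDelayMs * 2 ** attempt);
    }
  }
  throw lastError; // every attempt failed
}

// Graceful degradation: if the primary path still fails, use the fallback.
async function withFallback(primary, fallback) {
  try {
    return await primary();
  } catch (error) {
    console.warn("Primary call failed, using fallback:", error.message);
    return fallback();
  }
}
```

For example, wrapping the gpt-4o-transcribe call in `withRetry` and passing a whisper-1 call as the fallback gives the “fallback to Whisper” behavior listed in the architecture overview.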

Key Challenges and Solutions

1. Audio Format Compatibility

Challenge: Discord uses Opus-encoded audio streams, while OpenAI’s API requires specific formats like MP3 or WAV.

Solution: Implemented real-time audio format conversion using FFmpeg and prism-media, with proper buffering to handle streaming audio.
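Besides changing the container, downmixing is a cheap step before upload: decoded Discord audio is stereo, while speech transcription generally works from a single channel. A sketch of averaging interleaved 16-bit stereo PCM into mono with Node’s Buffer API (`stereoToMono` is a hypothetical name; FFmpeg’s `-ac 1` option does the same job if you already shell out to it):

```javascript
// Average the left and right channels of interleaved 16-bit LE stereo PCM.
function stereoToMono(pcm) {
  const frames = Math.floor(pcm.length / 4); // 4 bytes per stereo sample frame
  const mono = Buffer.alloc(frames * 2);
  for (let i = 0; i < frames; i++) {
    const left = pcm.readInt16LE(i * 4);
    const right = pcm.readInt16LE(i * 4 + 2);
    // The average of two int16 values always fits in int16
    mono.writeInt16LE(Math.round((left + right) / 2), i * 2);
  }
  return mono;
}
```

Halving the channel count halves the bytes sent per utterance; any WAV header written afterwards must declare one channel.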

2. Voice Activity Detection

Challenge: Determining when a user has finished speaking to trigger transcription.

Solution: Used the AfterSilence end behavior from @discordjs/voice, which ends a user’s audio stream after a configurable silence duration (1,000 ms in the setup code above), to segment audio into utterances.
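AfterSilence works on packet timing: Discord stops sending voice packets when a user stops talking, and the stream ends once no packet has arrived for `duration` milliseconds. The pure function below models that rule on a list of packet arrival times, which makes it easy to see how the threshold trades responsiveness against cutting people off mid-pause. It also drops very short bursts (coughs, keyboard clicks) before they cost a transcription call; `segmentUtterances` and `minSpeechMs` are illustrative, not @discordjs/voice APIs:

```javascript
// Group packet arrival times (ms) into utterances: a gap longer than
// silenceMs starts a new utterance, mirroring the AfterSilence rule.
function segmentUtterances(packetTimesMs, { silenceMs = 1000, minSpeechMs = 300 } = {}) {
  const segments = [];
  for (const t of packetTimesMs) {
    const last = segments[segments.length - 1];
    if (last && t - last.end <= silenceMs) {
      last.end = t; // still the same utterance
    } else {
      segments.push({ start: t, end: t }); // silence exceeded: new utterance
    }
  }
  // Drop blips too short to be worth a transcription call
  return segments.filter((s) => s.end - s.start >= minSpeechMs);
}
```

Raising `silenceMs` merges utterances separated by short pauses; lowering it makes the bot reply sooner but risks splitting a sentence at a breath.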

3. Latency Optimization

Challenge: Minimizing the delay between user speech and AI response to maintain natural conversation flow.

Solution: Implemented parallel processing pipelines, pre-warmed TTS models, and optimized API request patterns to achieve sub-3-second response times.
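One common form of pipeline parallelism for TTS is to split the reply into sentences, request audio for every chunk at once, and start playback as soon as the first chunk is ready. A sketch, assuming the TTS server accepts concurrent requests and that `synthesize` and `play` are async functions you provide (for example, wrappers around `generateChatterboxAudio` and Discord playback); `splitSentences` and `speakInOrder` are hypothetical names, and the 150-character limit mirrors the `chunk_size` used in section 4:

```javascript
// Split a reply into sentence-aligned chunks of at most maxChars characters.
// A single sentence longer than maxChars is kept whole, not cut mid-sentence.
function splitSentences(text, maxChars = 150) {
  const sentences = text.match(/[^.!?]+[.!?]*/g) ?? [];
  const chunks = [];
  let current = "";
  for (const raw of sentences) {
    const sentence = raw.trim();
    if (!sentence) continue;
    if (current && current.length + 1 + sentence.length > maxChars) {
      chunks.push(current); // current chunk is full
      current = sentence;
    } else {
      current = current ? `${current} ${sentence}` : sentence;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

// Request audio for every chunk at once, then play results strictly in order,
// so the first sentence can play while later ones are still being synthesized.
async function speakInOrder(text, synthesize, play, maxChars = 150) {
  const pending = splitSentences(text, maxChars).map((chunk) => synthesize(chunk));
  // Mark each promise as handled so a late failure is not reported as unhandled
  // before the loop reaches it; awaiting it below still throws the error.
  pending.forEach((p) => p.catch(() => {}));
  for (const audioPromise of pending) {
    await play(await audioPromise);
  }
}
```

Playback order stays fixed even when a later sentence finishes synthesizing first, because the loop awaits each promise in sequence.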

Results and Performance Metrics

The finished system achieves the following results:

- Average Response Time: 2.8 seconds from speech end to audio response start

- Transcription Accuracy: 95%+ for clear speech using GPT-4o Transcribe

- Voice Quality: High-fidelity synthetic voices that closely match reference samples

Future Enhancements

- Multi-language Support: Extending transcription and TTS to support multiple languages

- Emotion Detection: Analyzing voice tone to adjust character responses appropriately

- Voice Style Transfer: Real-time voice conversion for more dynamic character interactions

- Memory Persistence: Long-term conversation memory using vector databases

Conclusion

Building this AI-powered Discord voice bot has been an incredibly rewarding journey, and it showcases the current state of voice AI technology. Combining OpenAI’s language models with Chatterbox’s voice cloning produced a system that can hold natural, character-driven conversations in real time.

The key to success was understanding that each component (voice capture, transcription, AI generation, and speech synthesis) needed to be optimized both individually and as part of a cohesive real-time system. The result is a bot that feels surprisingly natural to talk to, with distinct character personalities that users can genuinely connect with.

For developers interested in building similar systems, I recommend starting with the foundational components, Discord integration and basic transcription, and adding the more complex features like voice cloning and character systems later. The modular architecture presented here makes it easy to iterate on individual components without rebuilding the entire system.

Example source code and deployment instructions are available on my GitHub repository. Feel free to explore, contribute, and build upon this foundation to create your own AI-powered voice experiences!